Wait... Is 🧠 AI 👑 king?

For this second issue, we've got lots of questions about AI... Do you have the answers? Let's chat...

💬 CONVERSATION STARTERS

Here are some questions we’d like to explore with you in our second issue of 👑 Content Is Not King:

💥 Dear creators, is AI going to take your job? Or is it a tool for success?

Are creators scared?

Are platforms working with creators or against creators?

🇺🇳 Why is AI a top priority at the United Nations?

Should we be worried when global experts are worried?

Why are Amazon, Anthropic, Google, IBM, Meta, Microsoft, Nvidia, and OpenAI partnering with the US Department of State?

💯 Authenticity and trust… What’s the role of AI?

Authenticity vs. Believability

And what can help promote trust and build confidence?

💥 Dear creators, is AI going to take your job? Or is it a tool for success?

Well, maybe a bit of both! At least that’s what we have heard from the creators we worked with and talked to this past year, mostly in the area of politics and diplomacy.

But let’s dig in a bit more to better understand the role of AI…

At VidSummit 2024 this past September, entrepreneur and creator Tom Bilyeu said that the exponential growth of AI-powered content creation will disrupt the very foundations that creators have built their careers upon — and made this prediction:

❝ The world as you know it, as a content creator will end in two years. In a year from now, it will be indistinguishable from real life. In two years from now, you'll be able to do this anywhere, anytime, anytime, anything. Your ability to do the content better than somebody else … goes away, because the reality is, anybody will be able to have an AI that just generates video after video after video, chasing the algorithm to see what works. ❞

A new study from affiliate marketing platforms Awin and ShareASale shows that only 32% of creators are using artificial intelligence and generative AI. Geographically, the numbers offer an inconsistent picture of actual usage, with 52% of German content creators using AI, but only 19% in the UK and the US. In addition, of those creators using AI, 88% of Germans, 87% of Americans, and 70% of Brits state that making content creation easier is the top reason.

Some interesting takeaways from the study:

47% use AI for creativity: brainstorming content, creating images

51% for optimization: reviewing copy, drafting copy for social media captions

2% for marketing: funnels, replying to comments

According to another recent report by YouTube about YouTube creators, 92% of them are already using generative AI tools.

90% of creators do not feel like they’re using Gen AI to the fullest extent possible

96% are using AI for creative support

47% for editing text, images, audio, or video.

29% say that one of their top 3 reasons for using Gen AI is to save time

28% to generate new ideas

26% to produce higher quality content

Are creators scared?

Of note: the Awin/ShareASale study focused on how ‘creator burnout’ can be linked to the growth of AI and AI tools, in particular the threats AI poses in terms of job loss and business risk.

16% of creators say AI poses a great threat

26% somewhat of a threat

58% minimal or no threat to their business

48% of creators are afraid it will decrease the amount of meaningful content

46% are afraid consumers will no longer take content seriously

43% are afraid it puts extra pressure on them to stand out from the crowd and produce unique content that is not AI-generated

Similarly, an earlier 2024 report by creator media firm Raptive showed that, if unregulated, AI could crush a vibrant ecosystem:

1 in 4 creators are worried about the threat of content theft

41% said revenue loss is their top concern

78% feel AI will negatively impact their income

85% of creators feel policymakers could be more effective in tackling concerns

78% of the public support regulation limiting AI if it impacts livelihoods

According to Leslie Miller, YouTube’s VP of Government Affairs and Public Policy, “as we look towards the future, our top priority is protecting our YouTube community. Our Community Guidelines support self expression while shielding viewers from harmful content. It’s what’s allowed us to foster the rich and diverse community that defines YouTube today.”

YouTube’s study also shows that creators want clear guidelines for posting AI-generated content:

❝ Creators are eager to navigate new technology thoughtfully and responsibly. They're committed to ethical content creation, with 74% of creators wanting guidelines for responsibly posting Gen A.I. content to social media and video platforms. To make this happen, collaboration is needed across platforms, developers, policymakers, and creators. ❞

Raptive’s study identified five policies to focus on:

Enforce copyright laws to protect intellectual property

Encourage revenue-sharing and licensing regimes for content used to train AI

Require ethical product design for AI-powered search products, which also means not reducing traffic to the content creators who fuel these products

Require a commitment from AI Labs and regulators that future AI products will not be allowed to unfairly compete against content creators

Identify anti-competitive behavior and hold Big Tech accountable

And you, what do you think?

Are platforms working with creators or against creators?

Social media companies are adapting quickly to this new environment.

Meta Connect 2024 this past week showed that Mark Zuckerberg has big ambitions for using AI to help creators, with tools like AI Studio — fully recreating real influencers as AI figures — and AI dubbing for Reels.

“It was pretty wild to watch,” commented Jay Peters on The Verge, about Zuckerberg presenting a live demo of a creator-based AI persona of Don Allen Stevenson III, “which looked like the creator, talked like the creator, and tried to respond to questions like the creator would.”

Google is also stepping up its efforts, with YouTube announcing this past week new artificial intelligence features for creators on its Shorts platform that tap into Google’s DeepMind video-generation model.

The features, powered by that model, known as Veo, will allow creators to add AI-generated backgrounds to their videos as well as use written prompts to generate stand-alone, six-second video clips. YouTube CEO Neal Mohan said he hopes Veo will enable creators to produce more Shorts videos with the help of AI.

Also this past week, Cloudflare launched new tools “to help content creators regain control of their content from AI bots,” becoming, the company said, “the first to stand up for content creators at scale.”

Cloudflare, one of the world’s largest networks underlying the global internet, has begun arming creators with what it called the equivalent of a free “easy button” to block all website crawlers with one click, giving them more control over who can access their data, as well as the ability to analyze how their content is used by AI models.

❝ AI will dramatically change content online, and we must all decide together what its future will look like. Content creators and website owners of all sizes deserve to own and have control over their content. If they don’t, the quality of online information will deteriorate or be locked exclusively behind paywalls. ❞

— Cloudflare co-founder and CEO Matthew Prince

Creators and creatives, did you miss this?

AI & Creatives: Leveraging Innovative Tools to Enhance the Creative Economy (with Alvin Bowles, VP of Global Business Group, Americas at Meta, and Tim Fu, Founder of Studio Tim Fu; moderated by Ravi Agrawal, Editor in Chief at Foreign Policy)

Mark Zuckerberg: creators and publishers ‘overestimate the value’ of their work for training AI (Adi Robertson, The Verge)

Artificial Intelligence (AI) Marketing Benchmark Report: 2024 (Influencer Marketing Hub)

How GenAI Changes Creative Work (Angelo Tomaselli and Oguz A. Acar, MIT Sloan Management Review)

AI Tools for Creatives & Freelancers

🇺🇳 Why is AI a top priority at the United Nations?

But is AI at risk of becoming just a buzzword?

❝ The speed of AI technology development and the breadth of its impact requires diverse policy ecosystems to work more cohesively. And in real-time. ❞

— Amandeep Singh Gill, UN Envoy on Technology

During last week’s UN General Assembly (UNGA) high-level meetings in New York, leader after leader mentioned AI in their speeches.

🇺🇳 United Nations Secretary-General António Guterres:

❝ The rapid rise of new technologies poses another unpredictable existential risk. Artificial Intelligence will change virtually everything we know — from work, education and communication, to culture and politics.

We know AI is rapidly advancing, but where is it taking us: To more freedom — or more conflict? To a more sustainable world — or greater inequality? To being better informed – or easier to manipulate?

A handful of companies and even individuals have already amassed enormous power over the development of AI — with little accountability or oversight. Without a global approach to its management, artificial intelligence could lead to artificial divisions across the board — a Great Fracture with two internets, two markets, two economies — with every country forced to pick a side, and enormous consequences for all.

The United Nations is the universal platform for dialogue and consensus. […] The global debate happens here, or it does not happen. ❞

🇧🇷 Brazil's President Luiz Inácio Lula da Silva, chair of the G20 in 2024:

❝ In the field of Artificial Intelligence, we are witnessing the consolidation of asymmetries that lead to a true oligopoly of knowledge. An unprecedented concentration is advancing into the hands of a small number of people and companies, based in an even smaller number of countries.

We are interested in an emancipatory Artificial Intelligence, one that also reflects the Global South and strengthens cultural diversity. It must respect human rights, protect personal data, and promote information integrity. And, above all, it should be a tool for peace, not war. ❞

🇺🇸 US President Joe Biden:

❝ We’ll see more technological change, I argue, in the next 2 to 10 years than we have in the last 50 years.

Artificial intelligence is going to change our ways of life, our ways of work, and our ways of war. It could usher in scientific progress at a pace never seen before. And much of it could make our lives better. But AI also brings profound risks, from deepfakes to disinformation to novel pathogens to bioweapons.

[…] It’s with humility I offer two questions.

First: How do we as an international community govern AI? As countries and companies race to uncertain frontiers, we need an equally urgent effort to ensure AI’s safety, security, and trustworthiness. As AI grows more powerful, it must grow also — it also must grow more responsive to our collective needs and values. The benefits of all must be shared equitably. It should be harnessed to narrow, not deepen, digital divides.

Second: Will we ensure that AI supports, rather than undermines, the core principles that human life has value and all humans deserve dignity? We must make certain that the awesome capabilities of AI will be used to uplift and empower everyday people, not to give dictators more powerful shackles on human — on the human spirit.

In the years ahead, there may well be no greater test of our leadership than how we deal with AI. ❞

🇮🇹 Italy’s Prime Minister Giorgia Meloni, chair of the G7 for 2024:

❝ We are witnessing the disruptive advent of generative artificial intelligence, a revolution that raises entirely new questions. Although I am not sure we should call it intelligence: intelligent is the one who asks questions, not the one who provides answers by processing data.

In any case, this is a technology that, unlike any we have seen throughout history, creates a world in which progress no longer optimizes human skills but can replace them, with consequences that risk being dramatic, especially in the job market, further verticalizing and concentrating wealth.

It is no coincidence that Italy wanted this issue to be at the heart of its G7 presidency, because we want to play our part in defining global governance of artificial intelligence, one that can reconcile innovation, rights, labor, intellectual property, freedom of expression, and democracy. ❞

Should we be worried when global experts are worried?

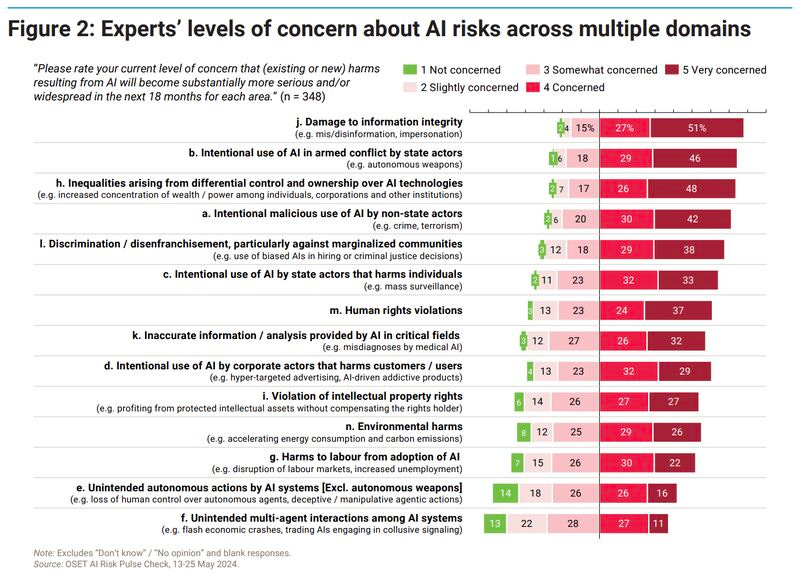

As we mentioned in our first issue last Monday, the United Nations has shown a lot of interest in AI. In particular, the recent report by the UN AI Advisory Body shows a high level of concern about AI risks across multiple domains.

Why are Amazon, Anthropic, Google, IBM, Meta, Microsoft, Nvidia, and OpenAI partnering with the US Department of State?

Together, they are committing more than $100 million and leveraging their combined expertise, resources, and networks to unlock AI’s potential as a powerful tool for sustainable development and improved quality of life in developing countries, while maintaining an unwavering commitment to safety, security, and trustworthiness in AI systems.

To this end, the new Partnership for Global Inclusivity on AI will focus on three areas:

Compute: increasing access to AI models, compute credits, and other AI tools

Capacity: building human technical capacity

Context: expanding local datasets

❝ The real power of AI doesn’t come from the system itself or the technology in the abstract. It comes from people around the world finding new ways to use the technology to solve hard problems and address the challenges facing their communities. ❞

— Sam Altman, co-founder and CEO of OpenAI

Policy people, did you miss this?

AI governance trends: How regulation, collaboration and skills demand are shaping the industry (Heather Domin, World Economic Forum)

AI Governance in a Complex and Rapidly Changing Regulatory Landscape: A Global Perspective (Esmat Zaidan and Imad Antoine Ibrahim, Nature)

Advancing Governance, Innovation, and Risk Management for Agency Use of Artificial Intelligence (Judith Pryor, Export-Import Bank of the United States)

AI and Human Rights: A Balancing Act

💯 Authenticity and trust… What’s the role of AI?

Enter, the “Trust Triangle” — logic, authenticity, empathy.

Digital strategy expert Nick Cicero, Adjunct Professor at the S.I. Newhouse School of Public Communications at Syracuse University, recently posted a video on LinkedIn with snippets of a guest lecture for his grad class featuring Carmen Collins, Director of Social at Generac.

“If you go to a marketing conference, you’re going to hear the word authentic a gazillion times,” Carmen told Nick’s students. “I think that thought leaders are not using the word in the right way. Authentic means, are you telling the truth? It doesn’t necessarily mean, are you making a connection?”

She also added that the marketing funnel is dead, because “empathy” — the third point of the “Trust Triangle” — “does not exist in the marketing funnel, empathy is the missing piece in everybody’s marketing strategy, across the board.” Carmen defined empathy as “I feel like you feel,” and not “I see how you feel.”

Lots of food for thought, in particular how these three points (logic, authenticity, empathy) relate to the current era of AI, AI-generated content, and marketing content. Can AI beat the “Trust Triangle” and build the perfect marketing funnel?

Authenticity vs. Believability

In June, Fohr founder and CEO James Nord pointed out: “Increasingly, in the contemporary paradigm of algorithmically-delivered timelines, we are viewing content from creators who we don’t follow and don’t know. So what happens to authenticity in a world of diminishing familiarity? If we cannot judge a post’s authenticity through prior knowledge of the creator, then believability becomes the most important thing — crafting effective hooks, using persuasive language, and keeping viewers entertained long enough to convince them.”

He added: “We can no longer count on the years of trust an influencer has built with their audience and need to continue to make sure the content influencer partners create for a brand is arresting, resonant, and effective.”

And what can help promote trust and build confidence?

The MIT Sloan Management Review and Boston Consulting Group (BCG) put this same question to an international panel of AI experts, including academics and practitioners, to gain insights into how responsible artificial intelligence (RAI) is being implemented in organizations worldwide. This is not specifically related to content and marketing, but it seems to fit this conversation quite well.

🦾 What’s happening at OpenAI?

This past week OpenAI Chief Technology Officer Mira Murati resigned from the company. Chief Research Officer Bob McGrew and another research leader, VP of research Barret Zoph, also resigned.

These exits were the latest in a string of recent high-profile departures that also include the resignations of OpenAI co-founder Ilya Sutskever and safety team leader Jan Leike in May.

OpenAI is discussing a new corporate structure that would remove its non-profit status and see it proceed instead as a for-profit benefit corporation, like rival AI company Anthropic. This would include a massive new funding round at a $150 billion valuation, and a 7% stake for Sam Altman.